Data-driven learning is an approach that uses information systematically to improve teaching processes, on the premise that learning challenges should be addressed scientifically. Adopting a flexible methodology that uses data to inform decisions can therefore support the continuous improvement of educational processes.

Successfully implementing a data-driven strategy can make teaching more effective by allowing instructors to tailor it to the individual needs of learners. It also helps to identify areas for improvement and supports better decision-making. More specifically, careful interpretation of data allows instructors to assess learners’ needs, strengths, progress and performance, and to analyse classroom instruction.

In the field of Education and Vocational Training, Virtual Reality simulators have emerged as powerful tools for recreating safe, controlled and realistic environments. Their ability to provide immersive experiences allows students to practise complex tasks without risk, but their true potential lies in something that often goes unnoticed: the ability to generate meaningful data by recording students’ interactions, allowing their performance to be closely monitored.

How can we apply data-driven learning to training with Virtual Reality?

Each session in a VR simulator produces an enormous amount of data: time spent on each task, mistakes made, routes taken, decisions made under pressure and many other performance indicators. This flow of information can be overwhelming if not managed correctly. Therefore, it is not enough to simply collect data; it is essential to transform it into relevant information to improve learning.
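
One way to make the step of transforming data into relevant information concrete is to collapse raw interaction records into a few per-task indicators. The sketch below assumes a hypothetical event log in which each `Event` has a task name, a timestamp in seconds and a type (`start`, `error` or `end`); these names and event types are illustrative and do not come from any specific simulator.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Event:
    # Hypothetical event record; a real simulator will use its own schema.
    task: str
    time_s: float
    kind: str  # "start", "error" or "end"


def summarise_session(events: list[Event]) -> dict[str, dict[str, float]]:
    """Collapse a raw event stream into per-task duration and error count."""
    starts: dict[str, float] = {}
    summary = defaultdict(lambda: {"duration_s": 0.0, "errors": 0})
    for e in sorted(events, key=lambda ev: ev.time_s):
        if e.kind == "start":
            starts[e.task] = e.time_s
        elif e.kind == "error":
            summary[e.task]["errors"] += 1
        elif e.kind == "end" and e.task in starts:
            # Time spent is measured from the matching "start" event.
            summary[e.task]["duration_s"] += e.time_s - starts.pop(e.task)
    return dict(summary)


log = [
    Event("load_pallet", 0.0, "start"),
    Event("load_pallet", 12.5, "error"),
    Event("load_pallet", 40.0, "end"),
    Event("park", 41.0, "start"),
    Event("park", 55.0, "end"),
]
print(summarise_session(log))
# {'load_pallet': {'duration_s': 40.0, 'errors': 1}, 'park': {'duration_s': 14.0, 'errors': 0}}
```

Further indicators (routes taken, decisions under pressure) could be added in the same way: a single pass over the log, with one accumulator per indicator.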

For example, a forklift simulator can record not only whether the learner has positioned the forks correctly, but also how many unnecessary manoeuvres they have made and whether they have prioritised safety over speed. Properly analysed, these details can reveal patterns and areas for improvement that would otherwise go unnoticed.
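
For the forklift example, the raw measurements could be reduced to the three questions the paragraph asks. The sketch assumes a hypothetical `PickupAttempt` record holding a fork-height error, a manoeuvre count, the minimum number of manoeuvres for that scenario and the peak speed in a pedestrian zone; the 2 cm tolerance and 6 km/h limit are placeholder values, not regulatory figures.

```python
from dataclasses import dataclass


@dataclass
class PickupAttempt:
    # All fields are hypothetical, chosen for illustration.
    fork_height_error_cm: float            # offset between forks and pallet slot
    manoeuvres: int                        # direction changes during the approach
    optimal_manoeuvres: int                # minimum needed for this scenario layout
    max_speed_in_pedestrian_zone_kmh: float


def assess_pickup(a: PickupAttempt, tolerance_cm: float = 2.0,
                  zone_limit_kmh: float = 6.0) -> dict:
    """Turn one attempt into the three indicators discussed in the text."""
    return {
        "forks_positioned": abs(a.fork_height_error_cm) <= tolerance_cm,
        "unnecessary_manoeuvres": max(0, a.manoeuvres - a.optimal_manoeuvres),
        "respected_safety_limit": a.max_speed_in_pedestrian_zone_kmh <= zone_limit_kmh,
    }


print(assess_pickup(PickupAttempt(1.5, 7, 4, 8.2)))
# {'forks_positioned': True, 'unnecessary_manoeuvres': 3, 'respected_safety_limit': False}
```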

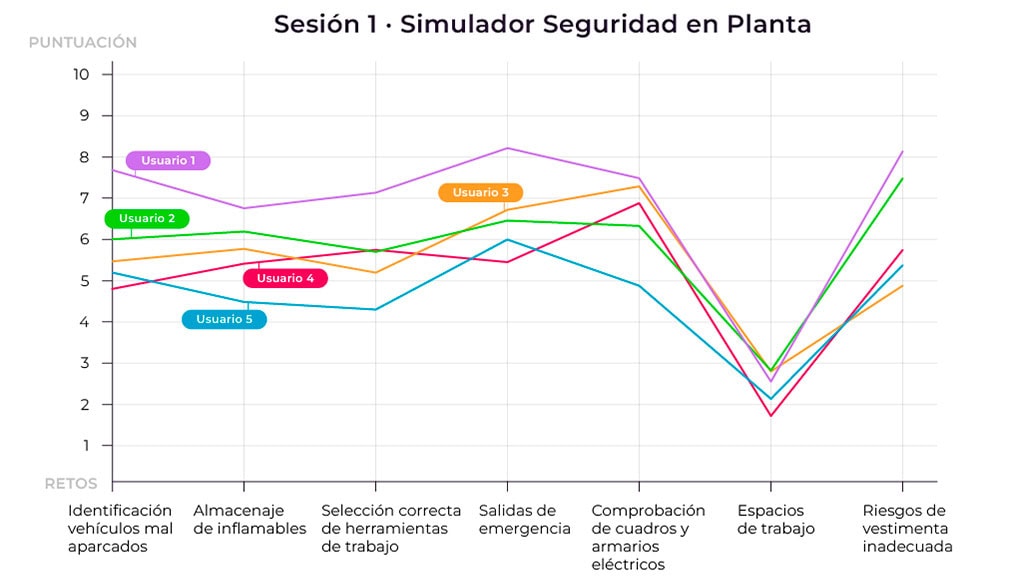

Analysis of the data obtained during simulator practice can help to identify both the areas in which students excel and those in which they need to improve.

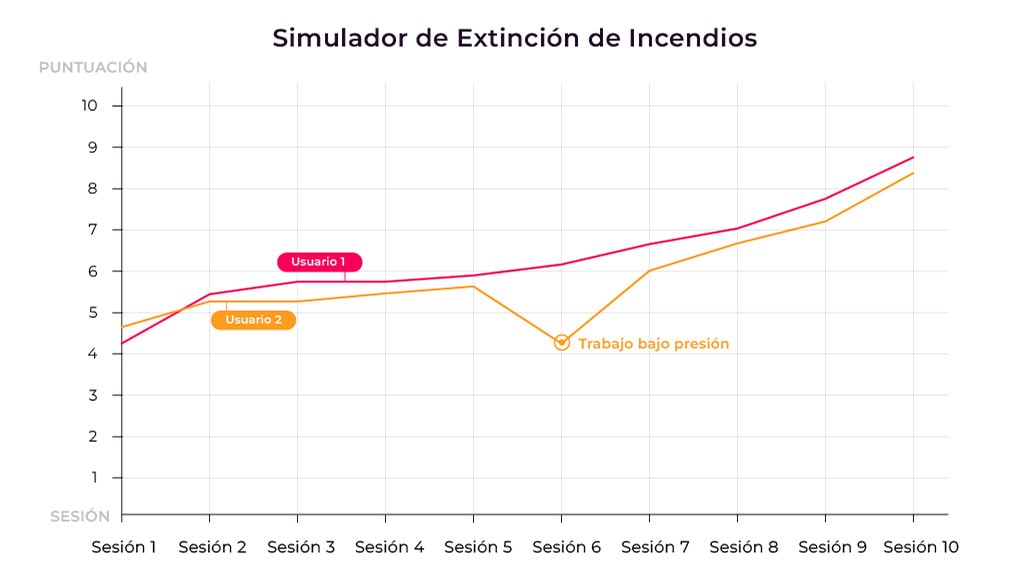

To identify these areas, the results of a single training session are not enough; it is essential to measure how the learner’s performance varies across sessions. For example, if a learner consistently performs well on a simulator task, that task can be identified as a strength. If, on the other hand, their performance improves only inconsistently, the task should be treated as an area for improvement.
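
A simple way to separate consistent strengths from areas for improvement is to look at both the average score and its spread across sessions. The sketch below uses the mean and the coefficient of variation (population standard deviation divided by the mean) from Python's `statistics` module; the function name `classify_task`, the score scale of 0 to 1 and both thresholds are assumptions for illustration.

```python
from statistics import mean, pstdev


def classify_task(scores: list[float], good: float = 0.8, max_cv: float = 0.1) -> str:
    """Label a task from per-session scores in [0, 1].

    The thresholds are illustrative assumptions, not validated cut-offs.
    """
    if len(scores) < 3:
        return "insufficient data"  # one or two sessions say little about consistency
    avg = mean(scores)
    cv = pstdev(scores) / avg if avg > 0 else float("inf")
    if avg >= good and cv <= max_cv:
        return "strength"
    return "area for improvement"


print(classify_task([0.85, 0.88, 0.86, 0.90]))  # strength
print(classify_task([0.98, 0.70, 0.99, 0.72]))  # area for improvement
```

The second example has a high average but large swings between sessions, so it is not labelled a strength; this mirrors the distinction the text draws between consistent and inconsistent performance.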

It is also necessary to put this variation in performance in context, which makes it possible to identify patterns and trends. For example, a student may improve on a particular task under normal conditions yet perform poorly on the same task under high pressure or when time is limited.
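
Contextualising performance can be as simple as tagging each session with the conditions under which it was run and comparing averages per tag. In this sketch, the condition labels (`normal`, `time_limited`) and the helper names are hypothetical; a trainer would define their own tags.

```python
from collections import defaultdict
from statistics import mean


def scores_by_condition(sessions: list[tuple[str, float]]) -> dict[str, float]:
    """Average a task's scores separately for each practice condition.

    `sessions` holds (condition, score) pairs; the labels are hypothetical tags.
    """
    grouped: dict[str, list[float]] = defaultdict(list)
    for condition, score in sessions:
        grouped[condition].append(score)
    return {c: mean(s) for c, s in grouped.items()}


def pressure_gap(by_condition: dict[str, float], baseline: str = "normal") -> dict[str, float]:
    """Drop in average score under each condition relative to the baseline."""
    base = by_condition[baseline]
    return {c: round(base - v, 3) for c, v in by_condition.items() if c != baseline}


history = [("normal", 0.82), ("normal", 0.88), ("time_limited", 0.61), ("time_limited", 0.65)]
print(pressure_gap(scores_by_condition(history)))  # {'time_limited': 0.22}
```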

Improvement can be measured in terms of speed, accuracy or efficiency. Once improvement is no longer sustained, it may be time to vary the type of task or to introduce new complexities.
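
Detecting when improvement is no longer sustained can be approximated by comparing the average of the most recent sessions with the window of sessions before it. The sketch assumes a metric where higher is better (for completion times, negate the values first); the function name `has_plateaued` and its `window` and `min_gain` parameters are hypothetical tuning choices.

```python
def has_plateaued(scores: list[float], window: int = 3, min_gain: float = 0.02) -> bool:
    """Return True when the latest window of sessions barely improves on the previous one."""
    if len(scores) < 2 * window:
        return False  # not enough history to judge
    recent = sum(scores[-window:]) / window
    previous = sum(scores[-2 * window:-window]) / window
    return recent - previous < min_gain


print(has_plateaued([0.50, 0.58, 0.66, 0.72, 0.75, 0.77]))              # False
print(has_plateaued([0.70, 0.78, 0.80, 0.81, 0.80, 0.81, 0.81, 0.82, 0.81]))  # True
```

Averaging over a window rather than comparing single sessions keeps one unusually good or bad session from triggering a change of task on its own.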

Analysing assessment data over time can also provide a measure of a learner’s overall progress. VR simulators make it easier to assess learners’ ability to apply what they have learned in a context that closely resembles real-world conditions.

This can be particularly useful in long-term training, where it is important to be able to demonstrate that learners are improving and developing their skills. This involves defining clear, specific goals at the start of the learning process and then assessing the extent to which they are being met. Ideally, these goals should be tailored to the needs and abilities of each learner.
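
Assessing the extent to which goals are being met requires goals expressed as measurable targets. The sketch below assumes a hypothetical `Goal` record (metric name, target value and whether higher is better), defined per learner at the start of training, and reports which goals the latest measurements satisfy; the metric names are illustrative.

```python
from dataclasses import dataclass


@dataclass
class Goal:
    # Hypothetical goal agreed with the learner at the start of training.
    metric: str
    target: float
    higher_is_better: bool = True


def goal_report(goals: list[Goal], latest: dict[str, float]) -> dict:
    """Check each goal against the latest measurement and summarise."""
    met = {}
    for g in goals:
        value = latest.get(g.metric)
        if value is None:
            met[g.metric] = False  # not yet measured counts as not met
        elif g.higher_is_better:
            met[g.metric] = value >= g.target
        else:
            met[g.metric] = value <= g.target
    share = sum(met.values()) / len(goals) if goals else 0.0
    return {"met": met, "share_met": share}


goals = [
    Goal("accuracy", 0.9),
    Goal("task_time_s", 60, higher_is_better=False),
    Goal("collisions", 0, higher_is_better=False),
]
report = goal_report(goals, {"accuracy": 0.93, "task_time_s": 72.0, "collisions": 0})
print(report["met"])  # {'accuracy': True, 'task_time_s': False, 'collisions': True}
```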

It is important to understand that these points are not independent. Identifying strengths and weaknesses can reveal patterns and trends which, in turn, shape how we assess a student’s overall progress. In addition, the use of assessment data should be an ongoing process in which immediate feedback and adjustments to the teaching approach are key to making learning more effective.

Moreover, for the data to have a real impact on training, it must also be analysed at group level, so that learners can be compared with one another. For example, if several users make mistakes at the same point in a simulation, analysis could reveal whether this is due to a lack of prior training or to a confusing design of the virtual scenario. These findings allow trainers to make adjustments that optimise learning.
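
At group level, one starting point is to count how many learners made a mistake at each step of a scenario. The sketch assumes a hypothetical mapping from each learner to the set of steps at which they erred; using a set means each learner counts at most once per step. The function `problem_steps`, the step names and the 50% threshold are illustrative. It only locates shared difficulties: deciding whether the cause is missing prior training or a confusing scenario design still requires the trainer’s judgement.

```python
from collections import Counter


def problem_steps(errors_by_learner: dict[str, set[str]],
                  min_share: float = 0.5) -> list[tuple[str, float]]:
    """Steps where at least `min_share` of learners made a mistake, most frequent first."""
    n = len(errors_by_learner)
    if n == 0:
        return []
    counts = Counter(step for steps in errors_by_learner.values() for step in steps)
    flagged = [(step, c / n) for step, c in counts.items() if c / n >= min_share]
    return sorted(flagged, key=lambda item: item[1], reverse=True)


cohort = {
    "ana": {"reverse_into_bay", "check_mirrors"},
    "ben": {"reverse_into_bay"},
    "carla": {"reverse_into_bay", "lift_height"},
    "dev": set(),
}
print(problem_steps(cohort))  # [('reverse_into_bay', 0.75)]
```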

Data analysis also opens the door to a more personalised approach to teaching. By monitoring each student’s progress in detail, it is possible to identify their strengths and areas for improvement, adapting content and challenges in a targeted way.

For example, a learner who has already mastered certain tasks might advance more quickly to more complex levels, while another who is struggling would receive additional feedback and more opportunities to practise. This type of dynamic adaptation can make learning more effective and help each learner get the most out of their time in the simulator.
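
The dynamic adaptation described above can be expressed as a small decision rule over recent scores. The function `next_step`, its thresholds and the three outcomes are hypothetical choices for illustration; in practice they would be calibrated per course and combined with the trainer’s own judgement.

```python
def next_step(level: int, recent_scores: list[float], max_level: int = 5,
              advance_at: float = 0.85, support_below: float = 0.6) -> tuple[int, str]:
    """Decide the learner's next level and the kind of support to offer.

    Scores are assumed to lie in [0, 1]; all thresholds are illustrative.
    """
    if not recent_scores:
        return level, "practise"
    avg = sum(recent_scores) / len(recent_scores)
    if avg >= advance_at and level < max_level:
        return level + 1, "advance"           # mastered: move to a harder level
    if avg < support_below:
        return level, "extra feedback and practice"  # struggling: add support
    return level, "practise"


print(next_step(2, [0.90, 0.88, 0.92]))  # (3, 'advance')
print(next_step(2, [0.50, 0.55, 0.58]))  # (2, 'extra feedback and practice')
```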